The Azure Vault, PGP, and other matters Part 1

Let’s step back into cloud space for a moment. I had this challenge to see if I could do some decrypting of partner files. A simple matter? Well, yes and no (it does have some interesting parts to deal with).

First off, the files here are encrypted with PGP. Now this type of file protection is not hard once you’ve gained an understanding of how to create and use keys. I’m not going to go into the deep end around PGP. There’s plenty of that for you to find on the internet. But I will need to mention a matter or two about how PGP key files are formatted and how they are used by some common PGP NuGet packages (more on that in a bit).

Back up in the cloud for a little while….

My design for getting encrypted partner files, decrypting them, and finally passing them along to downstream systems is simple for the most part. I use an Azure Logic App (a.k.a. a cloud workflow) to handle the file movements and to call the functions that decrypt (there are a couple of Azure Functions to deal with the PGP keys and the decrypting).

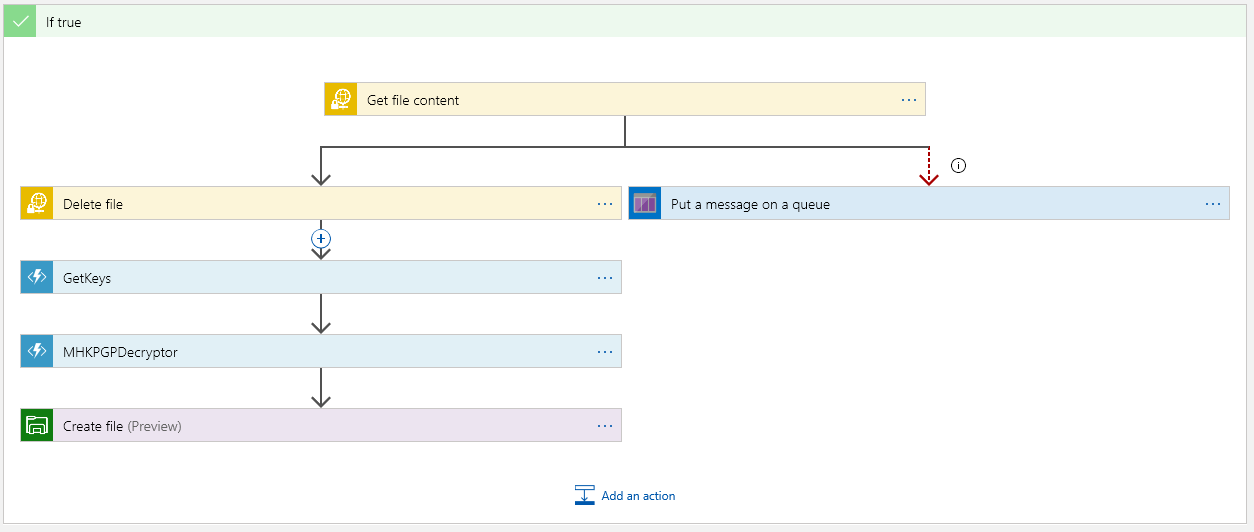

So the general overall design is something like this:

- The Logic App is triggered by a new file showing up on an sFTP site. The trigger runs on a timed interval.

- Next, the Logic App brings in the file content and removes the file from the sFTP site.

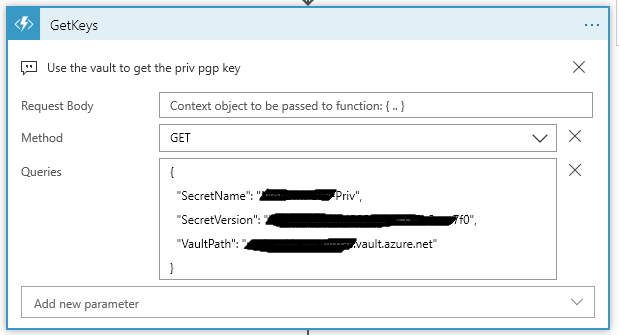

- We then get the decrypting key from a secrets store using an Azure Vault. This is done in an Azure Function.

- Once we have a key and file content, we call another Azure Function to decrypt the content.

- And finally, the decrypted content is pushed back out to a file.
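The steps above can be sketched in a few lines of Python. This is only an illustration of the order of operations, not the Logic App itself: every callable passed in (`get_content`, `delete_file`, `get_key`, `decrypt`, `write_file`) is a hypothetical stand-in for a connector or Azure Function, and naming the output by dropping the `.asc` suffix is my own assumption.

```python
from typing import Callable


def process_partner_file(
    path: str,
    get_content: Callable[[str], bytes],      # hypothetical: sFTP "get file content"
    delete_file: Callable[[str], None],       # hypothetical: sFTP "delete file"
    get_key: Callable[[], str],               # hypothetical: key lookup function
    decrypt: Callable[[bytes, str], bytes],   # hypothetical: PGP decrypt function
    write_file: Callable[[str, bytes], None], # hypothetical: downstream file write
) -> str:
    """Run the five steps in order and return the output path."""
    encrypted = get_content(path)   # bring in the encrypted content
    delete_file(path)               # remove the file from the sFTP site
    key = get_key()                 # fetch the decrypting key from the vault
    clear = decrypt(encrypted, key) # decrypt the content
    # Assumption: the output name is the input name without the .asc suffix.
    out_path = path[:-4] if path.lower().endswith(".asc") else path + ".out"
    write_file(out_path, clear)     # push the decrypted content back out
    return out_path
```

Passing the steps in as callables keeps the sketch free of any real Azure calls, so the flow can be read (and tried out) without a subscription.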

Basic and mostly straightforward… Shall we begin….

Starting with our connector:

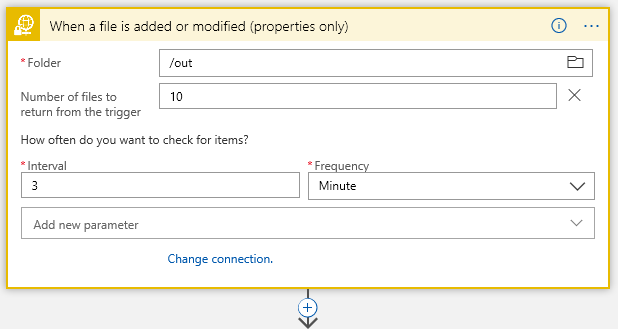

This is the sFTP connector… I have an /out folder that I check for files every 3 minutes.

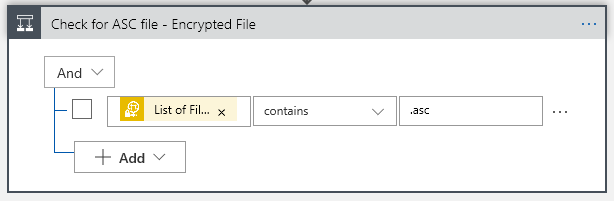

The next action filters on the filename with a Control:

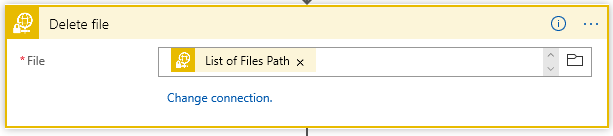

Now I know my files end in .asc, so I will only grab those using the Control Conditional (yes, I probably should .Upper the filename to cover all bases, but one must leave room for improvements). That “List of Fil…” btw is the “List of Files Path” Dynamic content from the sFTP connector.
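For what it is worth, the case-insensitive version of that check is tiny. Here is a minimal sketch in Python (the Logic App condition itself is configured in the designer, so this only shows the logic; the function name is made up for the example):

```python
def is_armored_pgp_file(filename: str) -> bool:
    """Return True when the filename ends in .asc, ignoring case."""
    # Lower-casing first means FILE.ASC and file.asc are treated alike.
    return filename.lower().endswith(".asc")
```

Note this tests the end of the name rather than "contains", so something like `notes.asc.txt` would be sent down the False side.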

This gives us our True/False choices:

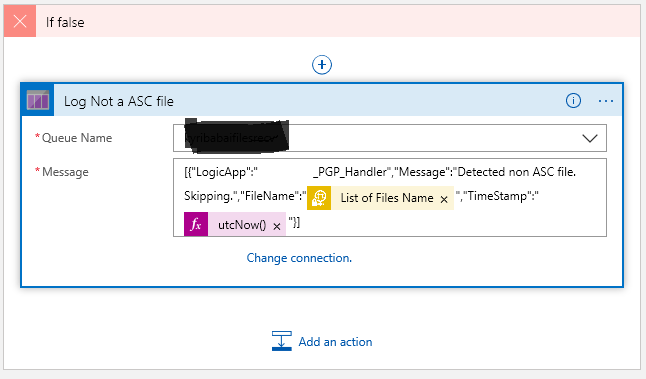

The “True” side is where we want to go to process the file, of course. But what about the False side? We get here because, while we detected a file landing or changing in our sFTP folder, it did not meet our requirement that the filename contain .asc. Big deal, right? Well, think about it. This may not be a problem, but if somewhere down the road one starts getting complaints about files not arriving… would it not be nice to have some historical trail of when crap happened? At the end of the day the False side is optional, but why start out by throwing away events that may be important at some point?

This is where the cloud (Azure) makes life really easy… let’s just push a false note to a Queue. Again, I’m not going to dive into creating a Storage account and creating a queue in that storage account – this is simple enough with a little reading and doing. But needless to say you will need a storage account and queue to do the following in the False:

Once you create a connection to your storage account, the available queues within that storage account will show up in the drop down. Now what you push into the queue is up to you. Here I’m putting my False note into some JSON. I’ve also added a couple of Dynamic values… the “List Of Files Name” gives us the filename from sFTP, and then we get the system timestamp.
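To give an idea of what such a note might look like, here is a small Python sketch that builds one. The field names (`event`, `reason`, `fileName`, `timestamp`) are my own picks for illustration and not a required schema; the queue takes whatever text you hand it.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def build_skipped_file_note(filename: str, when: Optional[datetime] = None) -> str:
    """Build a JSON note for a file that failed the .asc filter."""
    # Default to the current UTC time when no timestamp is supplied.
    when = when or datetime.now(timezone.utc)
    note = {
        "event": "skipped",                          # hypothetical field names
        "reason": "filename does not contain .asc",
        "fileName": filename,                        # from "List Of Files Name"
        "timestamp": when.isoformat(),               # system timestamp
    }
    return json.dumps(note)
```

Keeping the filename and timestamp as separate fields makes it easy to search the queue later when someone asks where their file went.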

Now the True side is a good bit more interesting of course, because there’s something to do:

The top block is where we must get the file content. This is encrypted at this point. Once we have the content, we should delete the file on the sFTP server:

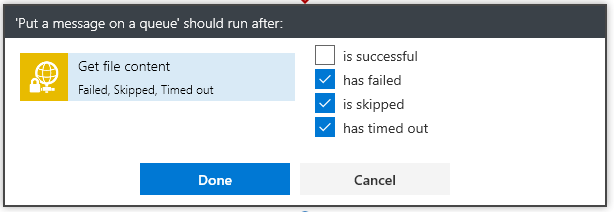

The “Put a message on the queue” side executes only if we cannot get the content. Its “Run After” is set to fire when Get Content ends with any status other than successful, so:

This way the App will not continue nor will it delete the file from the sFTP server.
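The same guard, written as ordinary code, might look like the sketch below. It is an analogy only: a Logic App works with action statuses rather than exceptions, and `get_content`, `delete_file`, and `enqueue_note` are hypothetical stand-ins for the connector actions.

```python
from typing import Callable, Optional


def fetch_then_delete(
    path: str,
    get_content: Callable[[str], bytes],   # hypothetical: sFTP "get file content"
    delete_file: Callable[[str], None],    # hypothetical: sFTP "delete file"
    enqueue_note: Callable[[str], None],   # hypothetical: "put a message on the queue"
) -> Optional[bytes]:
    """Return the content, deleting the source only after a successful read."""
    try:
        content = get_content(path)
    except Exception as exc:
        # Anything other than success: leave a note and stop here.
        enqueue_note(f"could not read {path}: {exc}")
        return None
    # Only reached when the read worked, so the file is never lost unread.
    delete_file(path)
    return content
```

The point of the ordering is that the delete sits behind the successful read, so a failed fetch leaves the file on the server for the next run.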

Next we have the magic… Get the keys from Azure Vault:

Now setting up a secret in the vault comes down to several steps…. You must:

- Create a Key Vault

- Create a Secret in the Vault using the PGP file as the Secret content.

- Provide access to the vault

The only step in building the vault that is tricky is knowing what to put in the secret’s value to get PGP to work…

Consider the ASCII format of a PGP key:

-----BEGIN PGP PRIVATE KEY BLOCK----- [CRLF]

Version: GnuPG v1.4.9(MingW32)[CRLF] – this line is optional

[CRLF]

<key detail> [CRLF]

<key detail2> [CRLF]

<key detail3> [CRLF]

[CRLF]

-----END PGP PRIVATE KEY BLOCK-----

Nope, I’m not making that up…. PGP, and more precisely the NuGet packages for handling PGP, are fussy about how the PGP key file is formatted. Trust me, the PGP code will not be able to find the key if you don’t get the format correct. And therein lies the problem with the Azure Vault.

It’s not really a problem… if you understand what is going on. If one uses the Web Portal to insert the secret’s value, then understand that the [CRLF] are stripped from the cut/paste of the value. Yes, PGP does not like that. So what is the trick? The part of the key file to paste into the secret value is:

<key detail> [CRLF]

<key detail2> [CRLF]

<key detail3> [CRLF]

The middle part of the key. One will find that the [CRLF] become a space (yes, that is a problem, but one that can be dealt with in the Azure Function :))
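To make the idea concrete, here is a minimal Python sketch of one way to undo the damage: split the stored value on whitespace, put the line breaks back, and wrap the result in the armor lines. This is an illustration under my own assumptions, not the Azure Function from Part 2. It assumes the secret holds only the key body (no Version line), and it follows the armor layout in RFC 4880: header line, blank line, body lines, footer line. The function name is hypothetical.

```python
ARMOR_HEAD = "-----BEGIN PGP PRIVATE KEY BLOCK-----"
ARMOR_TAIL = "-----END PGP PRIVATE KEY BLOCK-----"


def rebuild_armored_key(secret_value: str) -> str:
    """Turn a space-flattened key body back into an ASCII-armored key."""
    # Each whitespace-separated chunk was one line of the original body.
    body_lines = secret_value.split()
    if not body_lines:
        raise ValueError("secret value is empty")
    # Header, the required blank line, the body, then the footer.
    lines = [ARMOR_HEAD, "", *body_lines, ARMOR_TAIL]
    return "\r\n".join(lines) + "\r\n"
```

Splitting on any whitespace works because the base64 body of a key never contains spaces of its own, so every space in the stored value must have been a line break.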

** I’m going to stop here for now… and make a Part 2 of this. We will pick up with the Azure Get keys function that deals with the PGP key from the secrets.

~SG.