-

21 Hours to Build: Here’s the Sessions List

The list:

Microsoft Build 2018 (Seattle, WA, May 7-9, 2018) will feature over 350 technical sessions and interactive workshops on Azure, Windows, Visual Studio, Microsoft 365, and more. #MSBuild

Build 2018 Starts Tomorrow

-

Build 2018 – Nice topics this Year

From the Check it department… topics at this year’s Build.

Registration is now open for the Microsoft Build Live digital experience, May 7-9! Microsoft Build Live brings you live as well as on-demand access to three days of inspiring speakers, spirited…

Twitter: build poll

A Twitter poll concerning the MS Build 2018 event.

Check out @msdev’s Tweet: https://twitter.com/msdev/status/987380206179504128?s=09

-

New Year New Server

Yes indeed, a new year… and where have all the tech posts gone? Let’s see, been way too busy moving, holidays, fixing and messing up my servers and my web sites and …

The short of it… my old web servers were slightly damaged in the move… go figure. And it did take a bit to get all of that set up in the new lab.

Other changes:

Moved off of Go Daddy: the nickel-and-diming got old. Moved to another registrar.

VM’ed my web servers: yes, it’s time to move off the old servers and into the modern world. So obviously we’re on bigger, newer, faster servers.

And as I rebuilt the sites, these were also moved to a newer OS, etc. etc. So yes, been busy getting the infrastructure back up and going.

Happy New Year!

A follow-up: my KVM decided I did not fully understand that I need to use HBA-configured drives when using the ZFS file system. Needless to say, we had a power failure (I’ve since replaced the failing UPS), and as a result the VM main drive decided to corrupt itself… and of course as I started to rebuild the entire VM… one discovered that my only full image of the VM was last done in November last year…. Good grief….

So we are mostly back up… I’ve still two sites to go….

~SG

-

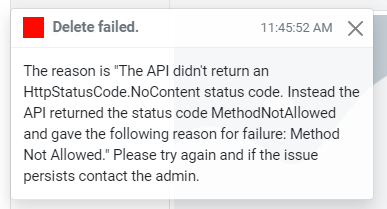

Dotnet 6 API with a HttpDelete and that 405 Method not allowed error

As we can tell from the title of the Post, we are going to talk about figuring out why in a client app one gets a Http Status code of 405 (Method Not Allowed).

When does this happen? Usually when a http request does a http PUT or DELETE. Yet, when one uses Swagger (openAPI) or Postman to directly call the same API Delete or Put, it works! This is starting to feel like a CORS issue, right? Read On ==>

So 405! Indeed looking at the surface of what is going on, one thinks Ah! CORS is at it again! Absolutely, this is what it feels like. After all, a Swagger/Postman GET/DELETE call under the same http domain works. But the moment one moves to a Client App coming in from a different domain (i.e. a different port on localhost, because different ports are also considered a different domain in terms of CORS), the Http PUT/DELETE stops working and throws a 405.

We are using VS2022 with Dotnet 6.0.100-rc.2.21505.57 (so the latest VS2022 and Dotnet RC 6 as of the writing of this post)

Wow… let’s step into how to rein in CORS…. We start in the API project in the Startup class.

In ConfigureServices =>

    services.AddCors(options =>
    {
        options.AddPolicy("CorsPolicy", builder => builder
            .AllowAnyOrigin()
            .AllowAnyHeader()
            .AllowAnyMethod()
            .WithMethods("GET", "PUT", "DELETE", "POST", "PATCH") // not really necessary when AllowAnyMethod is used
        );
    });

We build a CORS policy (yes, one can remove the .WithMethods clause).

And of course we add in the Configure method =>

    app.UseRouting();
    app.UseCors("CorsPolicy");

And everything is right with the world… Let’s do a Delete in a client app. Meaning we are doing an HttpClient Delete to a REST URL that contains the id:
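A client-side call of this kind can be sketched as follows. This is only an illustration, not the original client code: the `ItemClient` class, the `api/items` route, and the base address in the usage note are assumed names.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical client wrapper; the route "api/items" is an assumption for illustration.
public class ItemClient
{
    private readonly HttpClient _http;

    public ItemClient(HttpClient http) => _http = http;

    // Builds the relative URL with the id as a path segment, e.g. "api/items/5".
    public static string BuildDeleteUrl(int id) => $"api/items/{id}";

    // Sends DELETE api/items/{id} and returns the status code, so the caller
    // can tell a 405 (Method Not Allowed) apart from a success.
    public async Task<HttpStatusCode> DeleteAsync(int id)
    {
        using HttpResponseMessage response = await _http.DeleteAsync(BuildDeleteUrl(id));
        return response.StatusCode;
    }
}
```

Usage would be along the lines of `new ItemClient(new HttpClient { BaseAddress = new Uri("https://localhost:5001/") })`, where the port is whatever the API project happens to listen on.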

Yeap a hard 405… What the what What?

After digging through lots of readings on the topic of CORS 405 PUT/DELETE, DotNet 6 issues (with not much luck), I stepped upon the answer with a bit of thinking about the problem…. In my Controller we have this as the delete method header =>

    [HttpDelete("id")]
    public async Task<IActionResult> Delete(int id)
    { ...

Seems simple enough, it works in the Swagger interface for testing the API. I validated that the client is using and passing the correct url and id. WHAT!?

Look closely at the [HttpDelete… tag. Notice anything? If one said, “Duh, ScottGeek- you need to wrap the “id” within {}” then YEAP!

That would be the issue. The real question is why does this seem to work in the Swagger UI for the API but not in an app using HttpClient? I have absolutely no idea why (yes, I could get Fiddler out and start digging deep, nope). In this case what seems like a CORS acting-up issue (405 on a cross-domain request), yeah it’s not, or is it? This seems to be a bug somewhere, I suspect, in the OpenAPI (Swagger) bits. Why in the OpenAPI? Because it should reflect the same 405 when the API method tag is not 100% correct.

But let’s not just pick on OpenAPI. The fact that DotNet 6 allows both [HttpDelete("id")] and [HttpDelete("{id}")] without issuing some kind of build blowup or at least a green squiggle on the one that is in error… well I give it to ya… feels like a bug.

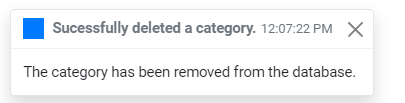

Put the {} around the id and….

Also, don’t forget to check one’s HttpPut as well…
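For reference, here is a minimal sketch of the corrected attributes. The `ItemsController` and `ItemDto` names and the `api/[controller]` route are assumptions for illustration, not the original controller. It also hints at a likely explanation for the Swagger puzzle: in attribute routing a template without braces is a literal path segment, so `[HttpDelete("id")]` answers `DELETE .../id` with the id bound from the query string. Swagger UI builds its request from that declared template, while a client calling `.../5` only matches whatever other action owns `{id}`, and a path match with the wrong verb comes back as 405.

```csharp
using System.Threading.Tasks;
using Microsoft.AspNetCore.Mvc;

// Hypothetical payload type used only for this sketch.
public record ItemDto(string Name);

[ApiController]
[Route("api/[controller]")]
public class ItemsController : ControllerBase
{
    // "{id}" is a route parameter: this action answers DELETE api/items/5.
    // With "id" (no braces) it would instead answer DELETE api/items/id?id=5.
    [HttpDelete("{id}")]
    public async Task<IActionResult> Delete(int id)
    {
        await Task.CompletedTask; // placeholder for the real delete logic
        return NoContent();
    }

    // Same rule for PUT: the braces make the id part of the path.
    [HttpPut("{id}")]
    public async Task<IActionResult> Put(int id, ItemDto item)
    {
        await Task.CompletedTask; // placeholder for the real update logic
        return NoContent();
    }
}
```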

~SG

-

Blazor with dotnet watch run in the Pre-Dotnet 6 and VS 2022 days

Oh, how we do wait for the release of dotnet 6 and, most importantly, VS 2022. Why? Well, the promise of hot-reload without hoops to jump through.

For the most part in VS 2019 16.10.4, “hot-reload” of a Blazor app is not too bad <= Circle that “not too bad”- it does actually work… mostly! Yes, I do refer to the “dotnet watch run”. It does detect changes in your Blazor files and reload the browser page… yeah, BUT it does have issues. Here comes the list….

It doesn’t detect new files added… go ahead add a new razor page while your project is in “dotnet watch run” – nope it will not find it no matter how many times you hard refresh the browser. Not only razor pages, but new stylesheets- yeap, it’s not going to find those either!

It has issues when you make too many complicated changes in your code. Yes compile errors that don’t go away.

It interferes with an already “flaky” intellicode/intellisense… My goodness talking about annoying! Already half the time, bootstrap intellisense stops working on razor pages. And what the hell happened to code snippets? (Probably less about hot-reload than this version of VS being riddled with bugs!)

Ok, I’m mostly over that now…. deep breath… 1…2…3…4… Alright.

Let’s get to the point, the matter of always having to type in that “dotnet watch run”, stop it, and type it in again… what’s the point? Let’s create a launch profile so that we can tack this into VS (gee you think the Blazor Template should already have that? Yeap it should). Here we go:

    "Watch": {
      "commandName": "Executable",
      "launchBrowser": true,
      "launchUrl": "http://localhost:5000/",
      "commandLineArgs": "watch run",
      "workingDirectory": "$(ProjectDir)",
      "executablePath": "dotnet.exe",
      "environmentVariables": {
        "ASPNETCORE_ENVIRONMENT": "Development"
      }
    }

In your launchSettings.json file, place the code above in the “profiles” section…. Make sure this is in the “PROFILES” section!

Now let the magic begin… well mostly… When you tap the “Watch” on the Build/Debug drop down… that lovely “dotnet watch run” will execute in a window… and now you too can experience the “buggy” hot-reload of a Blazor app. 😎

~SG

-

Earth Day 2019

Happy Earth Day – remember, In the vast Universe… There be only one Earth!

Earth Day | Earth Day Network

Visit the official Earth Day site to learn about the world’s largest environmental movement and what you can do to make every day Earth Day. Together, we can end pollution, fight climate change, reforest the planet, build sustainable communities, green our schools, educate, advocate and take action to protect Earth.

Source: www.earthday.org/

-

The Azure Vault, PGP, and other matters Part 1

Let’s step back into cloud space for a moment. I had this challenge to see if I could do some decrypting of partner files. A simple matter, well yes and no (it does have some interesting parts to deal with).

First off, the files here are encrypted with PGP. Now this type of file protection is not hard once you’ve gained an understanding of how to create and use keys. I’m not going to go into the deep end around PGP. There’s plenty of that for you to find on the internet. But I will need to mention a matter or two about how PGP key files are formatted and how they are used by some common PGP NuGet packages (more on that in a bit).

Back up in the cloud for a little while….

My design for getting encrypted partner files, decrypting them, and finally passing them along to downstream systems is simple for the most part. I use an Azure Logic App (a.k.a. a cloud workflow) to handle the file movements and call functions to decrypt (there are a couple of Azure Functions to deal with the PGP keys and decrypting).

So the general overall design is something like this:

- Logic App triggered on a new file showing up on an sFTP site. The trigger runs on a timed interval.

- Next, the Logic App brings in the file content and removes the file from the sFTP site.

- We then get the decrypting key from a secrets store using an Azure Vault. This is done in an Azure Function.

- Once we have a key and file content, we call another Azure Function to decrypt the content.

- And finally, the decrypted content is pushed back out to a file.

Basic and mostly straightforward… Shall we begin….

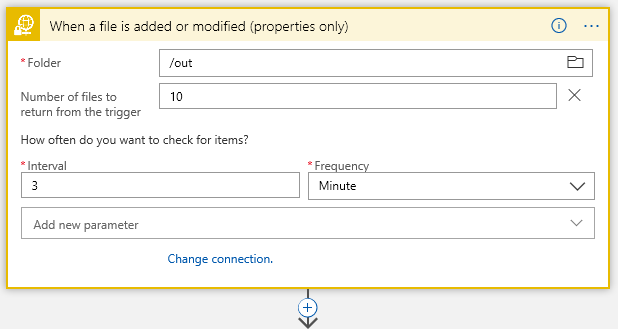

Starting with our connector:

This is the sFTP connector… I have an /out folder where I start looking for files every 3 minutes.

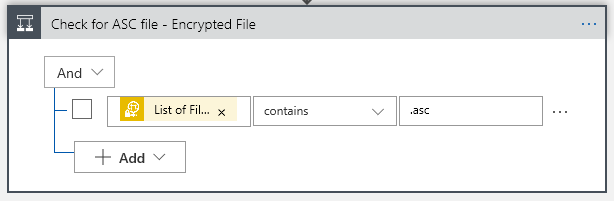

Next action: filter on the filename with a Control:

Now I know my files end in a .asc, so I will only grab those using the Control Conditional (yes, I probably should .Upper the filename to cover all bases, but one must leave room for improvements). That “List of Fil… btw is “List of Files Path” Dynamic content from the sFTP connector.

This gives us our True/False choices:

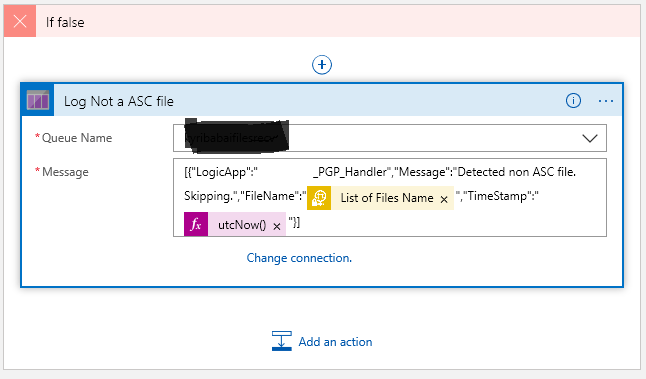

The “True” is where we want to go to process the file, of course. But what about the False side? We get here because, while we detected a file landing or changing on our sFTP folder, it did not meet our requirement that the filename contain a .asc. Big deal, right? Well, think about it. This may not be a problem, but if somewhere down the road one starts getting complaints about files not arriving… would it not be nice to have some historical trail of when crap happened? At the end of the day the False side is optional, but why start out with throwing away events that may be important at some point?

This is where the cloud (Azure) makes life really easy… let’s just push a false note to a Queue. Again, I’m not going to dive into creating a Storage account and creating a queue in that storage account – this is simple enough with a little reading and doing. But needless to say you will need a storage account and queue to do the following in the False:

Once you create a connection to your storage account, the available queues within that storage will show up in the drop down. Now what you push into the queue is up to you. Here I’m putting my False note into some JSON. I’ve also added a couple of Dynamic values… the “List Of Files Name” gives us the filename from sFTP, and then we get the system timestamp.

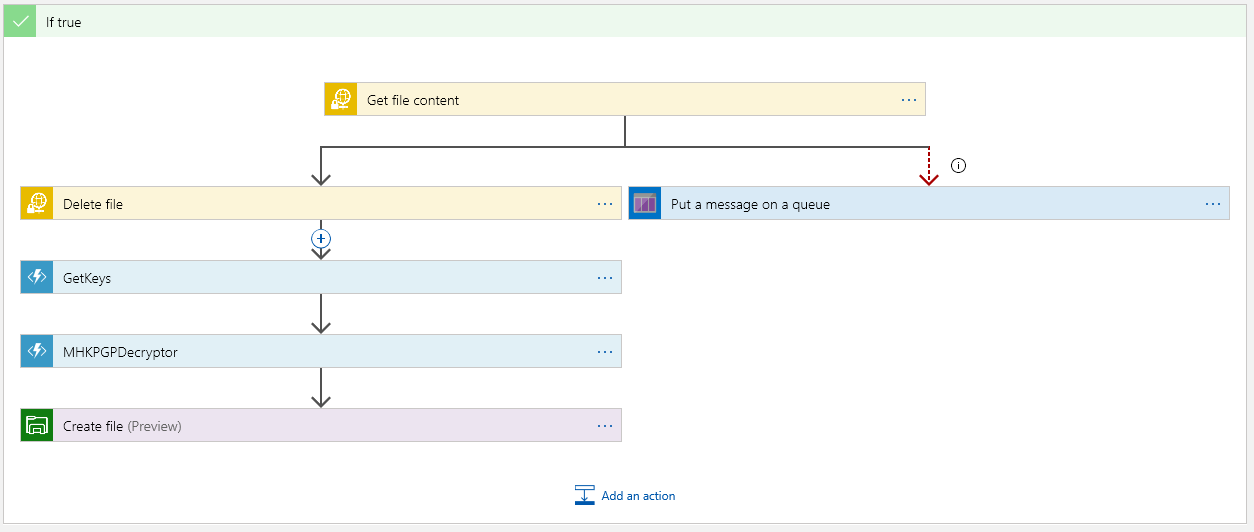

Now the True side is a good bit more interesting of course, because there’s something to do:

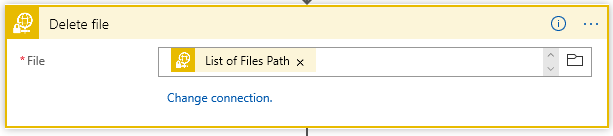

The top block is where we must get the file content. This is encrypted at this point. Once we have the content, we should delete the file on the sFTP server:

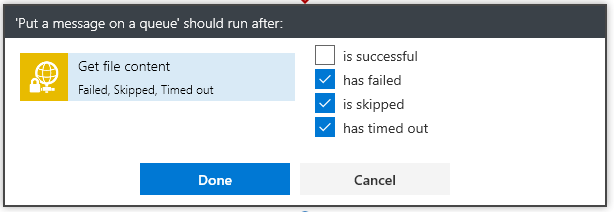

The “Put a message on the queue” side executes only if we cannot get the content. Its “Run After” is set for every Get Content outcome except “is successful”, so:

This way the App will not continue nor will it delete the file from the sFTP server.

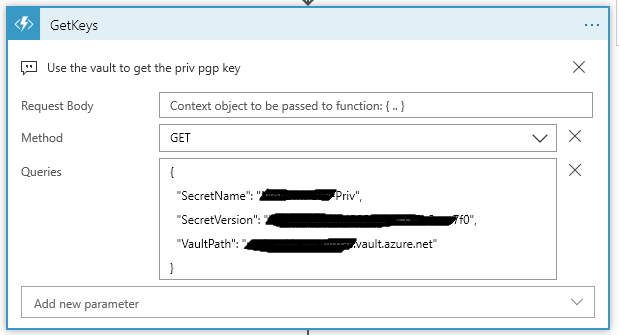

Next we have the magic… Get the keys from Azure Vault:

Now setting up a secret in the vault comes down to several steps…. You must:

- Create a Key Vault

- Create a Secret in the Vault using the PGP file as the Secret content.

- Provide access to the vault

The only step in building the vault that is tricky is knowing what to put in the secrets value to get PGP to work…

Consider the ascii format of a PGP key:

    -----BEGIN PGP PRIVATE KEY BLOCK----- [CRLF]
    Version: GnuPG v1.4.9(MingW32)[CRLF] (this line is optional)
    [CRLF]
    <key detail> [CRLF]
    <key detail2> [CRLF]
    <key detail3> [CRLF]
    [CRLF]
    -----END PGP PRIVATE KEY BLOCK-----

Nope, I’m not making that up…. PGP, and more precisely the NuGet packages for handling PGP, are fussy about how the PGP key file is formatted. Trust me, the PGP code will not be able to find the key if you don’t get the format correct. And there lies the problem with the Azure Vault.

It’s not really a problem… if you understand what is going on. If one uses the Web Portal to insert the secrets value, then understand that the [CRLF] are stripped from the cut/paste of the value. Yes, PGP does not like that. So what is the trick? The part of the key file to paste into the secret value is:

    <key detail> [CRLF]
    <key detail2> [CRLF]
    <key detail3> [CRLF]

The middle part of the key. One will find that the [CRLF] become a space (yes, that is a problem, but one that can be dealt with in the Azure Function :))
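To make the space problem concrete, here is a minimal sketch of how the key could be put back together with plain string operations. It is not the code from the Azure Function covered in Part 2: the `PgpKeyFormatter` class and `RebuildArmoredKey` method are hypothetical names, and it assumes the secret holds only the key body with each [CRLF] turned into a single space.

```csharp
using System;

// Hypothetical helper; not part of any PGP package.
public static class PgpKeyFormatter
{
    private const string Header = "-----BEGIN PGP PRIVATE KEY BLOCK-----";
    private const string Footer = "-----END PGP PRIVATE KEY BLOCK-----";
    private const string Crlf = "\r\n";

    // Rebuilds an ASCII-armored key from a body whose line breaks became spaces.
    public static string RebuildArmoredKey(string secretValue)
    {
        // Base64 key lines never contain spaces, so every space marks a lost line break.
        string[] lines = secretValue.Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries);
        string body = string.Join(Crlf, lines);

        // Header, blank line, key lines, footer. The optional "Version:" line is left out,
        // and so is the blank line before the footer (an assumption; add it if your package wants it).
        return Header + Crlf + Crlf + body + Crlf + Footer + Crlf;
    }
}
```

The result can then be wrapped in a stream and handed to whichever PGP package is in use.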

** I’m going to stop here for now… and make a Part 2 of this. We will pick up with the Azure Get keys function that deals with the PGP key from the secrets.

~SG.

-

Using Kestrel for ASP Core Apps

As I was looking about with some of the Core 2.2 notes, I came across some interesting notes on how to tweak about with Kestrel settings….

I’m not sure I like putting Kestrel options up in the Main Program builder. I tend to prefer these to collect in the Startup class, but I get the point of why Kestrel needs to be handled close to the Builder (it is after all the HTTP server handling web calls).

Shall we begin…

So up in Program.cs we do our normal Web Host Builder:

    using Microsoft.AspNetCore;
    using Microsoft.AspNetCore.Hosting;
    using Microsoft.EntityFrameworkCore;
    using Microsoft.Extensions.DependencyInjection;

    public class Program
    {
        public static void Main(string[] args)
        {
            var host = CreateWebHostBuilder(args).Build();
            MigrateDatabase(host);
            host.Run();
            //CreateWebHostBuilder(args).Build().Run();
        }

        // Applies any pending EF Core migrations before the host starts serving requests.
        private static void MigrateDatabase(IWebHost host)
        {
            using (var scope = host.Services.CreateScope())
            {
                var db = scope.ServiceProvider.GetRequiredService<ApplicationDbContext>();
                db.Database.Migrate();
            }
        }

        public static IWebHostBuilder CreateWebHostBuilder(string[] args) =>
            WebHost.CreateDefaultBuilder(args)
                .UseStartup<Startup>();
    }

This tends to be my way of doing Program.cs. Note I split out the Build part into a static method (the normal default way is to have one fluent statement that does a build and run in a single line; it’s that commented line in the Main method).

    public static IWebHostBuilder CreateWebHostBuilder(string[] args) =>
        WebHost.CreateDefaultBuilder(args)
            .UseStartup<Startup>()
            .ConfigureKestrel(opts =>
            {
                opts.Limits.MaxConcurrentConnections = 100;
            });

In my example, the method I push Kestrel settings to would of course have the .ConfigureKestrel with its options settings…
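A few more of the limits can be set the same way. The sketch below gathers them in one method so they do not crowd the builder; the `KestrelTuning` class and `ApplyLimits` method are hypothetical names, and the values are arbitrary examples rather than recommendations.

```csharp
using System;
using Microsoft.AspNetCore.Server.Kestrel.Core;

// Hypothetical helper that keeps the Kestrel limits in one place.
public static class KestrelTuning
{
    public static void ApplyLimits(KestrelServerOptions opts)
    {
        // Cap on simultaneously open connections (the default is unlimited).
        opts.Limits.MaxConcurrentConnections = 100;

        // Largest request body the server will accept, in bytes (10 MB here).
        opts.Limits.MaxRequestBodySize = 10 * 1024 * 1024;

        // How long an idle keep-alive connection stays open.
        opts.Limits.KeepAliveTimeout = TimeSpan.FromMinutes(1);

        // How long the server waits to receive the request headers.
        opts.Limits.RequestHeadersTimeout = TimeSpan.FromSeconds(15);
    }
}
```

It would then plug into the builder as `.ConfigureKestrel(KestrelTuning.ApplyLimits)`.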

Check it out… lots of interesting tweaks on settings…

You will find some details here on MS DOCS:

Learn about Kestrel, the cross-platform web server for ASP.NET Core.
